Algorithm to Allegro

I'm a prolific collector of hobbies. They're nearly all creative in some way: I've played guitar since I was a teenager, and written fiction for as long as I can remember. From those I've learnt music production, created a hyperlocal news site, taken on social media stints for startups, and made tiny robots using Arduino and Raspberry Pi... anyway, you get the idea. So, while I'm on a career break, I decided that I'd really like to take up piano.

Bear in mind that, while I have some musical background, I can't really read music, and I've never played anything similar to piano. I'm a middle-aged man starting entirely from the beginning.

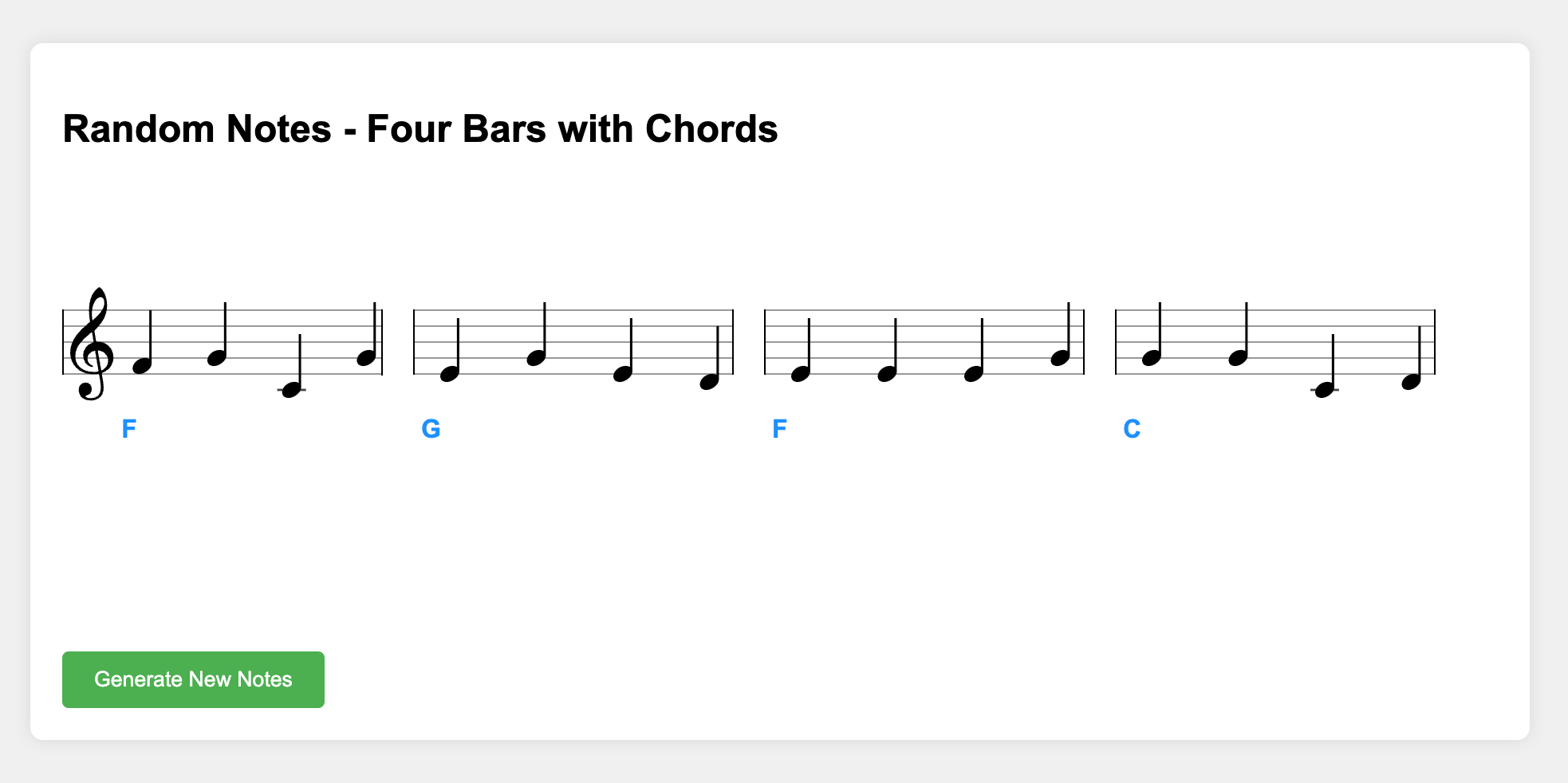

One of the things my tutor gave me to work on is sight reading simple chord and melody pieces. It's quite hard to practise sight reading though, because after a while you've memorised the piece you're playing. At that point you're no longer sight reading; you're relying on rote memorisation and muscle memory.

I decided to try out Anthropic's Claude LLM to generate unique, simple piano pieces for me to practise sight reading. Each note needed to be chosen at random from the handful I was learning, and each chord needed to be one of the three that we'd established as a base.
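To make those constraints concrete, here's a minimal sketch in Python of the kind of randomisation I was after. It isn't the code Claude produced for the app. The note and chord lists come from my practice material, and the eight bars match what I asked for in my prompt, but the function name `generate_piece`, the one-chord-per-bar layout, and the four notes per bar are assumptions I've made purely for illustration.

```python
import random

NOTES = ["C", "D", "E", "F", "G"]  # the five melody notes I was learning
CHORDS = ["C", "F", "G"]           # the three chords we'd established as a base


def generate_piece(bars=8, notes_per_bar=4, rng=None):
    """Return a list of bars, each with one random chord and a random melody.

    Assumption: one chord per bar and `notes_per_bar` equal-length melody
    notes per bar. The real app may lay things out differently.
    """
    rng = rng or random.Random()
    piece = []
    for _ in range(bars):
        piece.append({
            "chord": rng.choice(CHORDS),
            "melody": [rng.choice(NOTES) for _ in range(notes_per_bar)],
        })
    return piece


if __name__ == "__main__":
    # Print a fresh piece on every run, one bar per line.
    for number, bar in enumerate(generate_piece(), start=1):
        print(f"Bar {number}: chord {bar['chord']} | {' '.join(bar['melody'])}")
```

Each run gives a different piece, which is the whole point: there's nothing to memorise. Passing a seeded `random.Random` as `rng` makes the output repeatable, which is handy if you want to revisit a piece that tripped you up.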

You can find the HTML source for the app here

One of the notable things I found when prompting the LLM is that it was better to start simple and iterate on the results than to try to do everything at once.

This was my first attempt:

> create a simple web application that displays music notation for piano. The chords should only be either C, F or G, and the notes should only be C, D, E, F or G.
>
> The application should generate 8 bars of music randomly based on the constraints described, with the displayed information changed every time the user refreshes the page.

This never worked, no matter how much I prompted Claude to fix the various errors. After a few minutes of trying to figure out what was going wrong, I tried a different tack:

> make a simple app using vexflow to that displays a random sequence of notes. The notes should only be one of C, D, E, F or G.

Building from a simple start like this was much more productive than asking for multiple bits of functionality from the outset. I still ran into bugs as I introduced additional elements, but Claude was able to resolve them without too much difficulty.

I've seen other people using Claude and the new 'artifacts' feature to generate some interesting things, and it really feels closer to working alongside somebody now. However, there's still a feeling that the LLM is a human enhancer rather than something that can act on its own. If I didn't have a technical background, I'm not sure how I'd deal with things like errors being shown in the artifacts console (although, to be fair, simply copying and pasting them back to Claude tended to work fine).

While AI assistants like Claude with features such as 'artifacts' are undoubtedly advancing human-AI collaboration, they still have some way to go before becoming truly autonomous agents. Working with these tools is increasingly like having a capable assistant, yet they remain human enhancers rather than independent actors, and they assume some knowledge of the area being worked in. The future of AI assistance looks promising, but it's clear that human insight, creativity, and problem-solving skills will remain integral to the process for the foreseeable future.